Like Diogenes1, I’ve been wandering around from Puppet user to Puppet user asking about the questions I brought up in the first post in this series. It seemed like such a sub-optimal hole in the workflow for the standard use case that I couldn’t believe others weren’t seeing and addressing it with some best practice I hadn’t heard about yet.

One of the people who was kind enough to suffer my investigation was Eric Shamow, Manager of the System Operations Group at Advance Internet. He even tolerated this during the Q&A for his excellent talk at the PICC 2011 conference. Eric introduced me to Nigel Kersten at Puppet Labs, who graciously agreed to participate in the discussion about workflow Eric and I were having.

One of the things that came out of that discussion2 was an idea that came the closest to any I’ve heard for addressing my workflow concerns. I’d like to tell you about it now by paraphrasing what Nigel suggested in my own words. Any errors in the following are mine; any cool ideas found there should be attributed to Nigel.

For this explanation, I’m going to bring back some of the stellar diagram artwork from the first post in the series. My artistic skills haven’t really improved since that post, so apologies in advance.

The Big Idea

Let’s review the cast of characters from our first post:

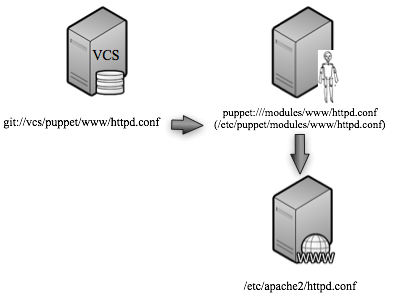

We have a web server whose configuration is managed by Puppet. The files seen and served by the Puppet server are all kept in a version control system of some sort.

Now let me introduce you to the new members that make this all work:

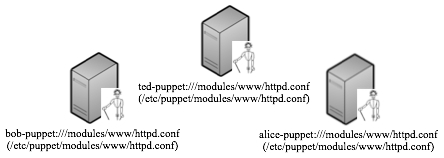

Nigel calls these “development Puppet servers” but I’m more partial to the name “personal Puppet servers” because I think it makes the concept even clearer. Here’s the idea:

Everyone on the team gets their own personal Puppet server. When a team member wants to work on the web server’s configuration, she:

- makes a clone of the main Puppet server’s node configuration (e.g. its /etc/puppet/modules directory).

- re-homes the Puppet client on the web server to point to her personal Puppet server.

- works on the web server’s configuration as it is kept on her personal Puppet server, changing it, pushing it to the web server and testing it until it is just the way she wants it.

- pushes the changes she made on her personal Puppet server to the main Puppet server3.

- re-homes the web server’s Puppet client back to the main Puppet server.
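To make “re-homing” a little more concrete, here is a minimal sketch of one way it could be done. It assumes (my assumption, not something Nigel specified) that the client is pointed at a server through the `server` setting in the `[agent]` section of its puppet.conf, and that you are happy to rewrite that file as text. The hostnames are hypothetical.

```python
import re


def rehome(conf_text: str, new_server: str, section: str = "agent") -> str:
    """Return puppet.conf text with `server` in [section] set to new_server.

    Assumption: the client picks its Puppet server from the `server`
    setting in the given section. Other settings are left untouched.
    """
    out = []
    current = None      # name of the section we are currently inside
    replaced = False
    for line in conf_text.splitlines():
        header = re.match(r"\s*\[(\w+)\]\s*$", line)
        if header:
            # Leaving the target section without having seen `server`: add it.
            if current == section and not replaced:
                out.append(f"    server = {new_server}")
                replaced = True
            current = header.group(1)
        elif current == section and re.match(r"\s*server\s*=", line):
            # Swap the existing setting for the new server name.
            line = f"    server = {new_server}"
            replaced = True
        out.append(line)
    if not replaced:
        # The target section was missing, or was last and had no `server`.
        if current != section:
            out.append(f"[{section}]")
        out.append(f"    server = {new_server}")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # Hypothetical hostnames, purely for illustration.
    original = (
        "[main]\n"
        "    logdir = /var/log/puppet\n"
        "[agent]\n"
        "    server = puppet.example.com\n"
        "    report = true\n"
    )
    print(rehome(original, "alice-puppet.example.com"))
```

Calling the same function again with the main server’s name is the “re-home back” step at the end of the list. For a quick one-off test you may not need to touch the file at all, since Puppet settings can generally also be given on the command line for a single run.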

Let’s see this process in pictures before we look at this solution in more detail:

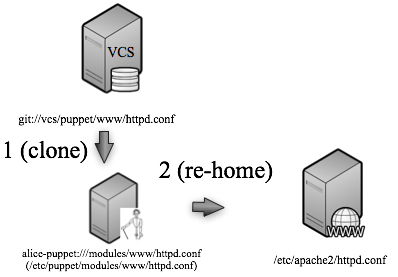

First we clone the configuration information from the main Puppet server to our personal Puppet server. Then we tell the Puppet client on the web server to look to our Puppet server instead of the “real” one for its configuration.

[Now pretend I’ve inserted a picture here of a sysadmin/devop furiously editing the web server config on a personal Puppet server, pulling it from that server to the web server, reloading the config on the web server, and repeating until happy with the changes.]

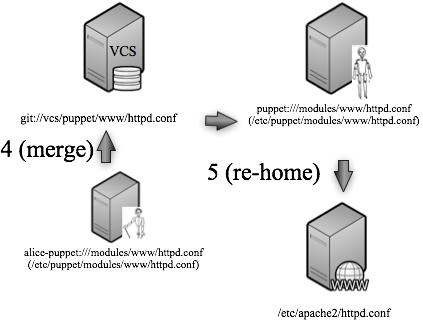

Finally, we merge our changes back to the main VCS repository, re-home the web server back to the “real” Puppet server, and everything is copacetic.

Pros and Cons

So what do I like so much about this solution?

- Once you’ve re-homed to your personal Puppet server, you can change, push, and test your new config in a very tight, fast, and lightweight loop. Given this addresses my major concern, this solution is already a win with the rest being gravy.

- That loop uses the same process to generate and distribute configs as the “normal” Puppet process. If your main Puppet server’s config does a bunch of fancy templatey, databasey, Ruby mangley4 things to produce the final web server configuration, they will all be present and tested during the “editing/development” process. This means you are much less likely to have to debug the Puppet part of the config after it gets to the real Puppet server5.

- The editing loop can be customized to the person who is doing the changing. If they want to be highly fastidious in their use of a VCS locally on their personal Puppet server, topic-branching, merging and rebasing like a madman, great. If they want to skip that and just change files willy-nilly, fine. Both types of people will have to “do the right thing” when they merge their changes to the main VCS repository.

- There is no longer a need to have a “personal” environment6. Instead of switching in and out of /etc/puppet/mypersonalenvironment/modules (and keeping that in sync with the target directory), all of the team members can make their changes in their target environment (/etc/puppet/modules, or /etc/puppet/development/modules, etc.) on their local machine. This is one less context shift during the process and one less thing each person has to keep alive in their head7.

What’s not to love about this idea?

- More moving parts. You have to be able to stand up more Puppet servers easily (though Nigel points out that standing up a Puppet server for just a few nodes, providing all of the Puppet dependencies are installed, is just a matter of typing:

puppet master --verbose --no-daemonize --<other settings> ).

- Your main Puppet infrastructure and configs have to be engineered with portability in mind. I was hand-waving when I said the personal Puppet server would behave in exactly the same way as the production master. That’s only true if you haven’t created a configuration that is tightly tied to a specific machine (e.g. the database backend only accepts connections from localhost). If a clone of the configs would break on every machine besides the master, that’s a problem.

- The other place I put on a pair of rose colored glasses was when I asserted that you would not have to debug the Puppet config when it got to the real server. There are lots of issues that could crop up when a config is deployed at scale that you wouldn’t necessarily see with one Puppet client or when working on a single web server. You may make a change that ripples out to more machines than you anticipate8. I think this proposed setup helps eliminate one tier of potential failures, but there’s always another one above it.

- This idea doesn’t necessarily remove the VCS-related complexities I whined about in my last post. Merging, branches, etc, all may still be a fun ball of wax. Similarly, there’s no real protection between two people making mutually incompatible changes to different parts of the web server. Not really news, but I thought I should cop to it anyway.

- There’s a definite lack of granularity here. Once I re-home a machine to my personal Puppet server, the entire machine stops getting configuration updates from anyone but me. If I’m cool and refresh my Puppet server’s clone periodically, that helps some, but we’re still fundamentally dealing with things on a per-machine level. It would be nice to be able to re-home just a portion of a machine’s configuration. I’ve never heard of a Puppet client receiving information from more than one Puppet server, but I bet you could fake it. One possible but potentially icky method would be to go back to something similar to the ideas in the Pro Puppet book. You could construct a directory tree from the amalgamation of several different VCS repositories. My assertion is it would be better to build this functionality into the Puppet layer vs. trying to cobble together something using a VCS tool.

- There are potentially some security questions around SSL certificates. See the details section below.

- Someone might forgetfully (or intentionally) leave the web server re-homed to the wrong place. Ideally you would have monitoring in place to scream should this happen.
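As a rough idea of what the “scream” in that last point could be built on, here is a small sketch of a check that flags machines whose Puppet client is not pointed at the main server. It assumes you already have some way of collecting each machine’s puppet.conf contents (that part is not shown), and the node names and hostnames are hypothetical.

```python
from typing import Dict, List

DEFAULT_SERVER = "puppet"  # Puppet's built-in default for the `server` setting


def configured_server(conf_text: str) -> str:
    """Return the `server` value an agent would use, given puppet.conf text."""
    values = {}
    section = None
    for line in conf_text.splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments and whitespace
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1]
        elif "=" in line and section is not None:
            key, value = (part.strip() for part in line.split("=", 1))
            if key == "server":
                values[section] = value
    # A value in [agent] overrides one in [main]; otherwise use the default.
    return values.get("agent", values.get("main", DEFAULT_SERVER))


def stray_nodes(confs: Dict[str, str], main_server: str) -> List[str]:
    """Names of nodes whose agent points somewhere other than main_server."""
    return sorted(
        name for name, text in confs.items()
        if configured_server(text) != main_server
    )


if __name__ == "__main__":
    # Hypothetical nodes and hostnames, purely for illustration.
    confs = {
        "web01": "[agent]\n    server = puppet.example.com\n",
        "web02": "[agent]\n    server = alice-puppet.example.com\n",
    }
    for node in stray_nodes(confs, "puppet.example.com"):
        print(f"WARNING: {node} is not homed to the main Puppet server")
```

Something like this could be wired into whatever monitoring system you already run; the sketch only covers the comparison itself.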

Details, Details

There are a number of implementation details, some obvious, some a little more hidden, that need to be solved to put together a scheme like the one I’ve described. For example, Puppet’s SSL/certificate authority stuff is going to have to come into play. In the initial setup with only a single Puppet production master, all of the clients have presumably already been authorized to use that server. That’s the whole puppetca -s stuff we go through to have the client’s certificates signed for the server. You have to handle a similar situation for each personal Puppet server9.

There are other questions, too. Where do you host the personal Puppet servers (on an individual workstation, on a new VM you spin up for a person in a central farm, perhaps on your laptop using Vagrant)? How do you make sure you have the necessary bits on disk for Puppet to run?10 Are the firewall rules ok (which machines are allowed to talk to which Puppet servers)? And so on. I’m sure you can think of more.

And Now Ladies and Gentlemen, The Future

There are three sets of futures to discuss:

- My future: we’re going to try using this setup on our initial deploy at $WORK unless we hit some showstopper on the way. I can try to report back on our experiences if anyone would find that valuable.

- Puppet’s future: discussing this in greater depth with Nigel and Eric, it seems like there is a healthy eagerness on the part of Puppet Labs and the rest of the community to wrestle with problems like these. I’ve used the word “workflow” throughout these posts, but I’m painfully aware that I have just touched the very surface of the topic. I believe the tool has the potential to evolve to make some of the sorts of stuff I’ve discussed here easier to pull off11. And further workflow-related additions are on the way.

- Our future together: I realize that these three blog posts might have an “asked, then answered” story arc, but I think the questions around how you use a configuration management tool like Puppet within a larger context are far from being exhausted. I hope you’ll comment here or keep bringing questions like this to the mailing lists for those tools. Please tell me how you are addressing these sorts of issues.

- though I’d like to think a little nicer. He seemed like quite a character. [↩]

- and it is possible I’ll post more here from it in future episodes [↩]

- or more precisely, to the VCS system that feeds the main Puppet server [↩]

- ok, I will stop now [↩]

- I realize there is a bit of handwaving here, more on that in the cons section [↩]

- in the Puppet sense of the word. This is essentially a person’s own directory tree within the /etc/puppet module directory [↩]

- and hey, if you like using a ton of personal environments, there is nothing about this solution that precludes you from doing so [↩]

- Luke’s talk here suggests they are working on or are going to work on better tools to let you understand the scope of Puppet config changes. [↩]

- maybe you can make it easier on yourself by using autosign, caveat implementor, or pre-generating certs. I haven’t thought more about this but I wonder if you could perform some sleight-of-hand using interface aliases/CNAMEs [↩]

- the Pro Puppet book suggests you can manage Puppet with Puppet which sounds peachy to me [↩]

- I like it when a tool makes it easy to “do the right thing,” i.e. follow best practices. [↩]
